This article is part of The Conversation’s series on drought.

Water is a finite but crucial resource. In most river basins around the world, water is diverted for industrial, municipal and domestic consumption. It’s also a critical component of wetlands and other natural ecosystems that are of tremendous value to society. Worldwide, the bulk of water use is tied to agriculture – it accounts for approximately 66% of water diverted from natural sources for human use and 85% of water consumption. In the arid western United States, it’s not uncommon for irrigation to represent 75%-90% of all diversions.

Historically, much of the development that’s made these diversions possible in the US was subsidized by the federal government. This, together with water rights mechanisms that tend to preserve agriculture’s favored access to the water supply, has made water relatively inexpensive for agriculture. Few farmers have had much incentive to achieve greater efficiencies in their use of water for irrigation. As a result, the amount of water diverted for irrigation is about two to three times as much as is needed for crop production. On average, more than half of the water diverted for irrigation percolates into the groundwater or returns to surface streams without watering crops.
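The arithmetic behind those figures is simple enough to sketch in a few lines of Python. This is a back-of-the-envelope illustration, not a hydrological model: the function name `implied_efficiency` is our own, and the only inputs are the two-to-three-times ratio quoted above.

```python
def implied_efficiency(diversion_ratio: float) -> float:
    """Fraction of diverted water that the crop actually needs.

    diversion_ratio is (water diverted) / (water needed by the crop).
    """
    if diversion_ratio <= 0:
        raise ValueError("diversion_ratio must be positive")
    return 1.0 / diversion_ratio


# The two ends of the range quoted in the text: 2x and 3x crop need.
for ratio in (2.0, 3.0):
    eff = implied_efficiency(ratio)
    print(f"diverted = {ratio:.0f}x crop need: "
          f"{eff:.0%} needed by crops, {1 - eff:.0%} not")
```

At twice the crop requirement, half of the diverted water is not needed by the crop; at three times, roughly two-thirds is not.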

Globally, about 40% of the world’s total food supply comes from irrigated land; in the US, the irrigated fraction of our agricultural land has reached 18%, but this relatively small area produces half the total crop value. As the Earth’s population grows, demand for food will also grow. Only a small share of the required increase in food production can come from expanding development of arable land, or by increasing the number and types of crops grown per year. The remainder must be met via yield increases and better water-use efficiency.

And as population increases, the demand for water for non-agricultural purposes will also grow. World water demand is projected to increase by 55% between 2000 and 2050, and most of this increase will come from manufacturing, electricity production, and urban and domestic use. So in a drier world, getting the amount of water used by irrigation under control is a necessity. New technologies might go a long way toward helping us reach that goal.

Using water or losing water?

Irrigation can “lose” water in several ways. Water can seep out of reservoirs or transmission canals before it ever gets to the field. After water is applied to the crop in the field, some of it can percolate into the groundwater system, where it’s no longer available to the roots of the crop, or it might run off the field altogether. Water losses that happen in the field are called “on-farm” losses. Total losses, including seepage from reservoirs, canals and so on, are called “system” losses.
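The distinction between on-farm and system losses can be made concrete with a small water budget. The sketch below is only an illustration: the class name `WaterBudget`, its field names and the volumes are all hypothetical, chosen to show how the two kinds of loss combine.

```python
from dataclasses import dataclass


@dataclass
class WaterBudget:
    """Volumes in any consistent unit; the numbers used below are made up."""
    diverted: float         # water taken from the source into the system
    conveyance_loss: float  # seepage from reservoirs and canals before the field
    on_farm_loss: float     # deep percolation and runoff within the field

    @property
    def delivered(self) -> float:
        """Water that actually arrives at the field."""
        return self.diverted - self.conveyance_loss

    @property
    def used_by_crop(self) -> float:
        """Water left for the crop after on-farm losses."""
        return self.delivered - self.on_farm_loss

    @property
    def on_farm_efficiency(self) -> float:
        return self.used_by_crop / self.delivered

    @property
    def system_efficiency(self) -> float:
        return self.used_by_crop / self.diverted


budget = WaterBudget(diverted=100.0, conveyance_loss=20.0, on_farm_loss=30.0)
print(f"on-farm efficiency: {budget.on_farm_efficiency:.1%}")
print(f"system efficiency:  {budget.system_efficiency:.1%}")
```

In this made-up case the farm looks more efficient than the system as a whole, because the on-farm figure ignores the water that never reached the field.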

Over the past 50 years, several technologies have been developed to decrease on-farm irrigation losses. Precision land-leveling uses laser-guided equipment to level the field so that water will flow uniformly into the soil, not run down any little hills or collect in little gullies. It makes it easier to limit the amount of water that seeps beneath where the roots of the crop can reach.

Compared to older, conventional furrow and flood application technologies, center pivot and other sprinkler methods and drip irrigation systems improve the uniformity of water application, reducing the amount of water lost to deeper percolation or runoff from the field. They’re expensive, though, and are adopted largely to reduce the costs of other inputs to production, such as labor. And they don’t necessarily result in significant improvements in overall efficiency, since they don’t address the losses that happen during storage, transmission and distribution of irrigation water.

Pinpointing water needs and availability

Monitoring and information technologies are emerging that show promise for reducing water losses at both the system and farm levels.

For example, it’s now possible to use a supervisory control and data acquisition (SCADA) system to provide precise, integrated control over an entire irrigation system, in real time. A SCADA system automatically measures the amount of water available in reservoirs, quantities of water flowing in canals, and amounts of water being diverted onto fields. SCADA systems also can be used to easily and remotely control releases from reservoirs, diversions into canals and so forth. The users of a SCADA system – typically reservoir and canal operators, but also individual farmers – can easily see where all the water is and how it’s being used, and they can make better decisions about what to do next with respect to releases and diversions. There are several successful examples of such systems, especially those based on internet communications and display of information.

Relatively few farmers or irrigation system operators currently use remote sensing as a source of information to reduce losses and improve irrigation efficiency. But this is likely to change as the cost of newly emerging technologies declines and as the information they produce becomes more readily available.

For example, it is possible now to use satellite imagery to estimate quantities of water used in individual irrigated fields almost anywhere in the world. Such a system, developed here at the Utah Water Research Laboratory at Utah State University, is now available in the Lower Sevier Basin of Utah. It is a website that allows irrigators to see a season-long summary of water use and the soil moisture status of their crop. It allows individual farmers to monitor water consumption within a field and do a better job of planning future irrigation timing and quantities. The system also allows canal and reservoir operators to monitor total water use in the areas served and better anticipate future irrigation demands for the entire system.

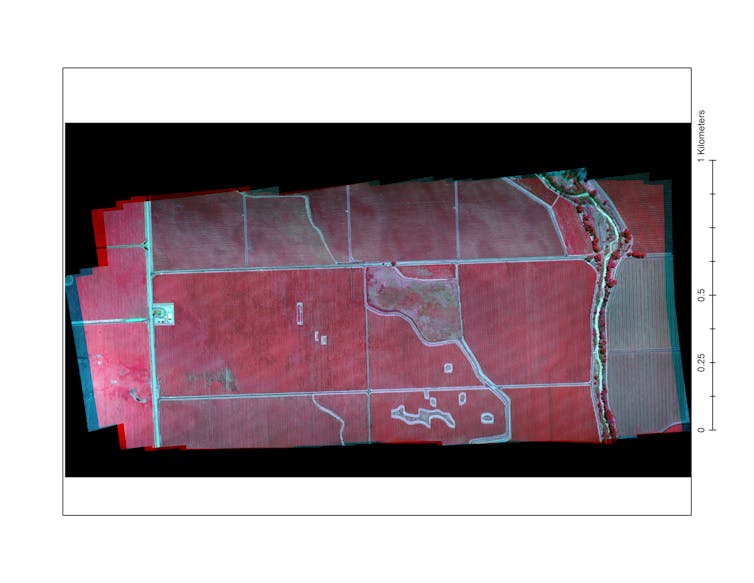

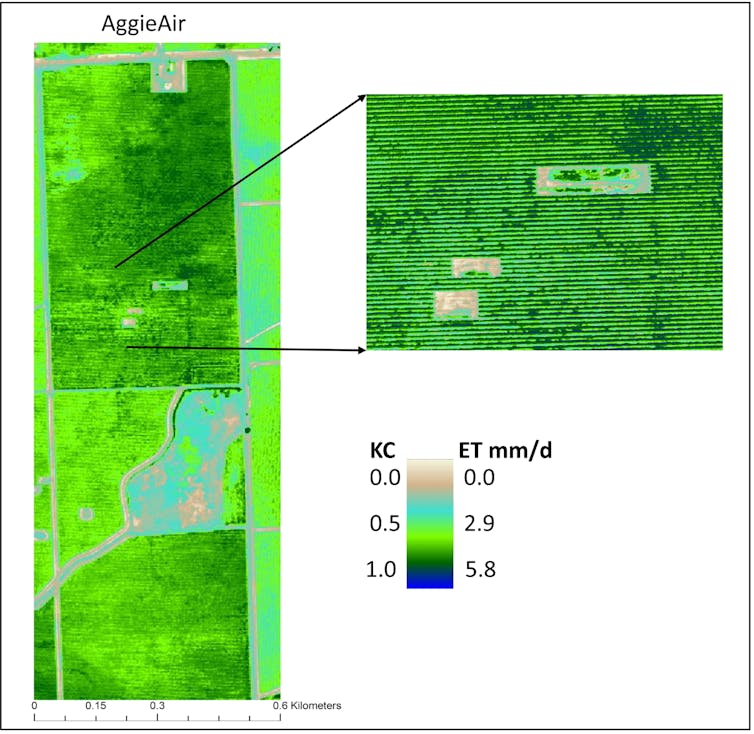

An exciting technology that’s in its infancy is the use of small unmanned autonomous systems (UAS) – or, more commonly, “drones” – to monitor agricultural systems, acquire scientific imagery and provide information for the operation of irrigation systems. Drones can also support more efficient fertilizer applications, weed and pest management, and harvesting. An example is the AggieAir UAS we’ve developed; we’re researching new methods to measure agricultural water use at very high spatial resolution: 15 centimeters (6 inches), as opposed to the coarse, 30-meter resolution of Landsat satellite-based monitoring.
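To get a feel for what that difference in resolution means, the comparison can be worked out directly. This is a rough sketch using only the two pixel sizes quoted above; the function name `fine_pixels_per_coarse_pixel` is our own, and it ignores real-world details such as image overlap and sensor geometry.

```python
LANDSAT_PIXEL_M = 30.0  # Landsat pixel edge length quoted above, in meters
DRONE_PIXEL_M = 0.15    # drone pixel edge length quoted above, in meters


def fine_pixels_per_coarse_pixel(coarse_m: float, fine_m: float) -> int:
    """Number of fine pixels that tile the ground area of one coarse pixel."""
    linear_ratio = coarse_m / fine_m   # how many fine pixels fit along one edge
    return round(linear_ratio ** 2)    # area scales with the square of the edge


count = fine_pixels_per_coarse_pixel(LANDSAT_PIXEL_M, DRONE_PIXEL_M)
print(f"{count:,} drone pixels cover one Landsat pixel")
```

Each 30-meter Landsat pixel spans 200 drone pixels along an edge, so a single satellite measurement corresponds to 40,000 separate drone measurements over the same patch of ground.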

If drone technologies can be made affordable, they could potentially provide very valuable information about when and where to apply precise quantities of water to the crop. Farmers with the right irrigation technology could use this information to accurately apply irrigation water at varying rates throughout the field rather than the same rate everywhere, which can lead to waste.
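A simple calculation shows why variable-rate application can save water. The sketch below is purely illustrative: the zone names, water needs and areas are invented, and it assumes that a uniform-rate system has to apply enough water everywhere to satisfy the driest zone.

```python
# Hypothetical per-zone irrigation needs, in millimeters of water depth.
# In practice these would come from imagery-derived estimates.
zone_need_mm = {"north": 20.0, "center": 35.0, "south": 50.0}
zone_area_ha = {"north": 4.0, "center": 4.0, "south": 2.0}

MM_HA_TO_M3 = 10.0  # 1 mm of water over 1 hectare equals 10 cubic meters


def uniform_volume_m3(need_mm: dict, area_ha: dict) -> float:
    """Volume applied if every zone gets the rate the driest zone needs (assumption)."""
    rate = max(need_mm.values())
    return sum(rate * area_ha[zone] * MM_HA_TO_M3 for zone in need_mm)


def variable_volume_m3(need_mm: dict, area_ha: dict) -> float:
    """Volume applied if each zone gets exactly its own need."""
    return sum(need_mm[zone] * area_ha[zone] * MM_HA_TO_M3 for zone in need_mm)


uniform = uniform_volume_m3(zone_need_mm, zone_area_ha)
variable = variable_volume_m3(zone_need_mm, zone_area_ha)
print(f"uniform rate:  {uniform:,.0f} cubic meters")
print(f"variable rate: {variable:,.0f} cubic meters")
print(f"difference:    {uniform - variable:,.0f} cubic meters "
      f"({(uniform - variable) / uniform:.0%})")
```

For this invented 10-hectare field, watering each zone according to its own need uses about a third less water than applying the highest rate everywhere. Real savings would depend on how much conditions actually vary across the field.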

Managing in the future

As water demand increases, the competition for a fixed water supply will become more difficult to manage, especially in arid and semi-arid parts of the world and places where populations will grow rapidly. Since water use in irrigated agriculture is generally very inefficient, and since the economic value of water for agriculture is typically much lower than it is for cities and industry, there will be a natural trend to reduce water allocation to agriculture in favor of other uses. It’s important for water managers and policymakers to understand these trade-offs and the economic, environmental and social impacts of alternative schemes for water allocation.

As climate change increases the uncertainty in future water supplies, water management institutions will need to operate with greater flexibility in order to respond effectively to shifts in both water demands and supplies. Remote sensing and information technologies will be two tools they can use to help fit agricultural uses into the larger, more complex total water puzzle.

Mac McKee receives funding from various Federal sources that support academic research institutions. These include USDA, NASA, and water and natural resources management agencies in the State of Utah.

Alfonso Torres-Rua receives funding from various Federal sources that support academic research institutions. These include USDA, NASA, and water and natural resources management agencies in the State of Utah.

* This article was originally published at The Conversation
